High Availability Installation

Overview

This installation guide shows the steps required to configure HAProxy connection failover for:

- Primary / Secondary database connectivity.

- Flow Editor Apache Jarvis API connectivity.

This example runs through the installation of HAProxy load balancing and failover for two node types:

- SMS nodes.

- External FE nodes.

Overall Installation Steps

The high-level steps for installing and deploying HAProxy load balancing and failover are:

- Install the HAProxy packages.

- Install the N-Squared PostgreSQL auxiliary packages.

- Configure HAProxy for all nodes.

- Configure Jarvis applications to utilize HAProxy load balancing and failover.

This example only shows the deployment of a single HAProxy configuration for the primary database instance running on port 5432; this will need to be expanded for each additional PostgreSQL instance deployed.

External FE nodes will only require a single HAProxy configuration.

Install HAProxy (SMS Nodes)

Install the required packages:

```
dnf install haproxy n2pg-aux
```

Enable the installed packages:

```
systemctl enable haproxy
systemctl enable pgsqlchk
```

Install HAProxy (Flow FE Nodes)

Install the required packages:

```
dnf install haproxy
```

Enable HAProxy:

```
systemctl enable haproxy
```

Configure Health Check User (SMS Nodes)

To query the database and determine its read/write (primary/secondary) status, a user must be created on the primary SMS node.

This user will be configured and utilized by the pgsqlchk service when checking the database state.

```
su - postgres
psql
CREATE USER health_check_user LOGIN PASSWORD 'health_check_user';
```

Configure pgsqlchk (SMS Nodes)

The n2pg-aux package deploys the pgsqlchk service, which allows HAProxy to query which PostgreSQL instance in the cluster is the active read/write primary.
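The internals of pgsqlchk are not documented here. The following is a minimal sketch of the decision such a check might make for each HAProxy request. It rests on three assumptions. First, the check uses PostgreSQL's `pg_is_in_recovery()`, which is false on a primary and true on a standby. Second, when repmgr checking is enabled, it uses a count of running primaries reported by repmgr. Third, it returns 503 as the failure code. The function name `health_status` is hypothetical.

```python
from http import HTTPStatus
from typing import Optional


def health_status(in_recovery: bool, repmgr_primary_count: Optional[int] = None) -> int:
    """Return the HTTP status a pgsqlchk-style check could send to HAProxy.

    Hypothetical sketch: only a writable primary answers 200; anything else 503.
    in_recovery: result of `SELECT pg_is_in_recovery();` on the local instance
        (true on a read-only standby, false on the primary).
    repmgr_primary_count: number of running primaries reported by repmgr, or
        None when repmgr status checking is disabled.
    """
    if in_recovery:
        # Standby: read-only, so HAProxy must not send Jarvis connections here.
        return HTTPStatus.SERVICE_UNAVAILABLE.value
    if repmgr_primary_count is not None and repmgr_primary_count != 1:
        # Two primaries (split brain) or inconsistent metadata: refuse traffic
        # so the database is not used accidentally.
        return HTTPStatus.SERVICE_UNAVAILABLE.value
    return HTTPStatus.OK.value
```

For example, `health_status(False, 2)` returns 503 even though the local instance is writable. This mirrors the two-primary safeguard described for `check_repmgr_status`.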

Edit the configuration as required:

```
nano /etc/psqlchk/config.json
```

Defaults to:

```json
{
    "database_host": "localhost",
    "check_repmgr_status": true,
    "repmgr_config_file": "/etc/repmgr/13/repmgr.conf",
    "repmgr_binary": "/usr/pgsql-13/bin/repmgr",
    "health_check_user_password": "health_check_user",
    "webserver_host": "0.0.0.0",
    "webservers": [
        {
            "target_port": 5432,
            "listen_port": 25432
        }
    ]
}
```

| Property | Default | Description |
|---|---|---|
| `database_host` | `localhost` | [Required] The host on which the database to check is listening. |
| `health_check_user_password` | `health_check_user` | [Required] The password of the `health_check_user` created on the target database. |
| `webserver_host` | `0.0.0.0` | [Required] The host on which the webserver spawned by the pgsqlchk service listens. Use `0.0.0.0` to listen on all host networks. |
| `webservers` | `[{"target_port": 5432, "listen_port": 25432}]` | [One or More Required] A list of webservers to spawn. Each webserver defines a distinct `target_port`, which maps to a PostgreSQL instance, and a `listen_port` on which the webserver listens on `webserver_host`. |
| `check_repmgr_status` | `true` | If true, the check script validates the local repmgr metadata by running repmgr to ensure only one primary node is running in the cluster. If two primary nodes are running, the response sent to the requesting endpoint is a failure code, ensuring that the database is not used accidentally. |
| `repmgr_config_file` | `/etc/repmgr/13/repmgr.conf` | The path to the repmgr configuration file to use when `check_repmgr_status` is set to true. |
| `repmgr_binary` | `/usr/pgsql-13/bin/repmgr` | The path to the repmgr binary to use when `check_repmgr_status` is set to true. |
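The configuration can be sanity-checked before starting the service. The following is a minimal sketch that loads a config.json like the default above and enforces the required keys from the table. It also rejects duplicate listen ports and fills in the documented defaults for the optional repmgr keys. `load_pgsqlchk_config` is a hypothetical helper, not part of n2pg-aux.

```python
import json
from typing import Any, Dict

# Defaults for the optional keys, as listed in the property table.
OPTIONAL_DEFAULTS = {
    "check_repmgr_status": True,
    "repmgr_config_file": "/etc/repmgr/13/repmgr.conf",
    "repmgr_binary": "/usr/pgsql-13/bin/repmgr",
}
REQUIRED_KEYS = ("database_host", "health_check_user_password", "webserver_host", "webservers")


def load_pgsqlchk_config(text: str) -> Dict[str, Any]:
    """Parse a pgsqlchk-style config.json string and validate it (hypothetical helper)."""
    config = json.loads(text)
    missing = [key for key in REQUIRED_KEYS if key not in config]
    if missing:
        raise ValueError(f"missing required keys: {missing}")
    if not config["webservers"]:
        raise ValueError("at least one webserver must be configured")
    listen_ports = set()
    for server in config["webservers"]:
        for key in ("target_port", "listen_port"):
            if not isinstance(server.get(key), int):
                raise ValueError(f"webserver entry needs an integer {key}: {server}")
        # Two webservers cannot bind the same listen port.
        if server["listen_port"] in listen_ports:
            raise ValueError(f"duplicate listen_port {server['listen_port']}")
        listen_ports.add(server["listen_port"])
    # Supplied values override the defaults for the optional keys.
    return {**OPTIONAL_DEFAULTS, **config}
```

For example, `load_pgsqlchk_config(open("/etc/psqlchk/config.json").read())` returns the configuration with any omitted repmgr settings filled in. It raises `ValueError` if a required key is missing.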

Start the pgsqlchk service:

```
service pgsqlchk start
```

Logs for the pgsqlchk service can be found in /var/log/pgsqlchk/pgsqlchk.log.

Configure HAProxy (SMS Nodes)

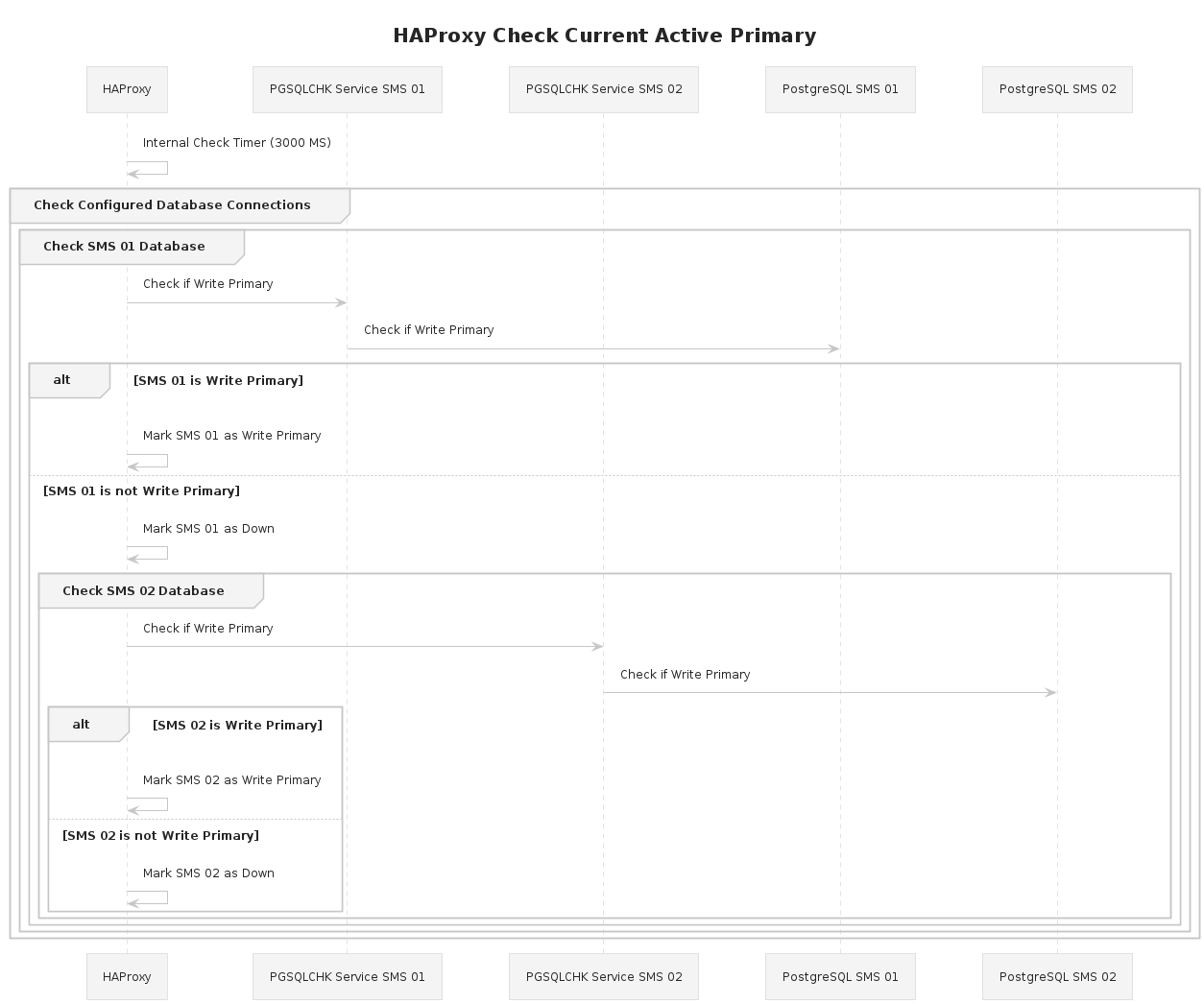

SMS nodes are configured so that the locally installed Jarvis instances are routed, via HAProxy, to whichever PostgreSQL instance in the cluster is currently available and writable.

This follows the scenario:

-

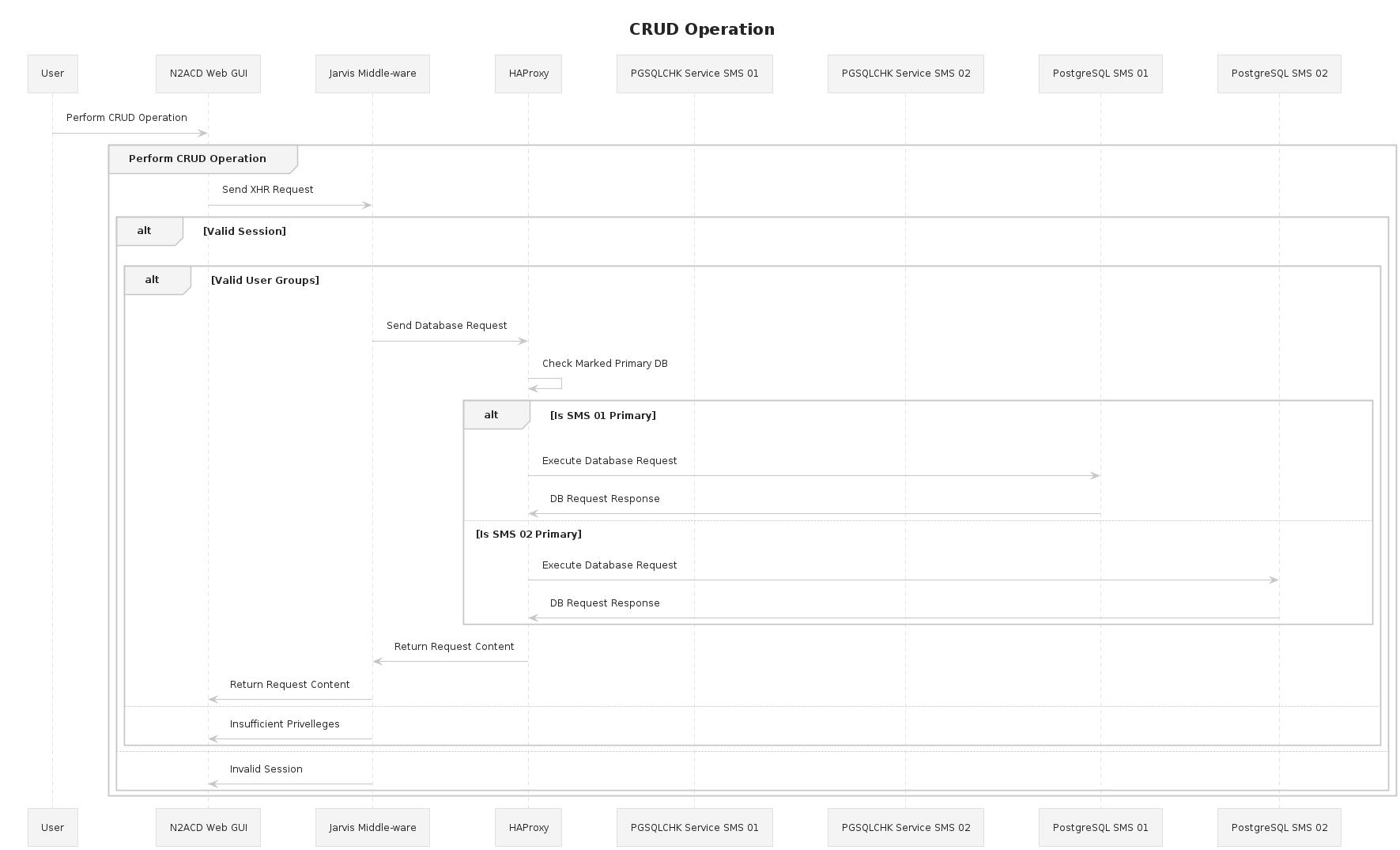

End user attempts to connect to a management GUI.

-

A load balancer or proxy routes traffic to one of the SMS servers that has an active Apache server.

-

The Apache server serves the application which connects back to the SMS node for the Jarvis / API layer.

-

Jarvis attempts to connect to the PostgreSQL instance.

- While normally the API middleware layer would connect on port

5432it instead connects to the port15432exposed by the HAProxy instance.

- While normally the API middleware layer would connect on port

-

HAProxy receives the connection attempt.

- The target connection port is configured with both the PostgreSQL instances of

sms-01andsms-02and maps to the25432port exposed by thepgsqlchkservice on each target node.psqlchkin turn determines which of the PostgreSQL instances is the write primary and which is the read secondary. psqlchkworks in tandem with PostgreSQL automated failover.

- The target connection port is configured with both the PostgreSQL instances of
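The routing step can be modelled in a few lines. HAProxy forwards a Jarvis connection arriving on port 15432 only to a server whose pgsqlchk check on port 25432 returned 200, and it forwards the connection to that server's port 5432. This is a simplified illustration, not HAProxy's implementation. The `check_results` mapping is a hypothetical stand-in for the live health checks.

```python
from typing import Dict, List, Tuple

DB_PORT = 5432  # PostgreSQL port on each SMS node


def eligible_targets(check_results: Dict[str, int]) -> List[Tuple[str, int]]:
    """Return the (host, port) pairs HAProxy may forward port-15432 traffic to.

    check_results maps each configured server (e.g. "sms-01") to the HTTP
    status its pgsqlchk endpoint on port 25432 returned; only 200 counts as up.
    """
    return [(host, DB_PORT) for host, status in sorted(check_results.items()) if status == 200]
```

pgsqlchk answers 200 only on the write primary, so `eligible_targets({"sms-01": 200, "sms-02": 503})` yields `[("sms-01", 5432)]`. After a failover promotes `sms-02`, the results flip and new connections follow the new primary.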

Back up the base HAProxy configuration, then edit it:

```
cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.install
nano /etc/haproxy/haproxy.cfg
```

Use the following example configuration, altering it as required:

```
global
    maxconn 1000
    # Enable core dumps.
    set-dumpable

defaults
    log global
    mode tcp
    retries 2
    timeout client 30m
    timeout connect 4s
    timeout server 30m
    timeout check 5s

# Enable a stats page that can be accessed on port 7000.
# This stats page shows the current routing and availability of all connections.
listen stats
    mode http
    bind *:7000
    stats enable
    stats uri /
    # Security can be enabled via auth directives.
    # stats auth username:password

# Set up the PostgreSQL load balancing.
# All inbound connections to 15432 are routed according to the health checks made against each instance on port 25432.
listen Postgres-ACD
    # Bind to the local port.
    bind *:15432
    # Perform a check for each configured server, ensuring that a valid response is returned.
    option httpchk GET /
    http-check expect status 200
    default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions
    # Configure each server to balance traffic across and check the status of.
    # Port 25432 is the port the pgsqlchk webserver listens on.
    # This allows HAProxy to check the status via pgsqlchk rather than using the DB port 5432 directly.
    server sms-01 sms-01:5432 maxconn 100 check port 25432
    server sms-02 sms-02:5432 maxconn 100 check port 25432
```
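The `default-server inter 3s fall 3 rise 2` line controls how quickly a failover is noticed. HAProxy documents `fall` as the number of consecutive failed checks before a server is considered down, and `rise` as the number of consecutive successful checks before it is considered up again. Checks run every `inter` (3s here). The following sketch simulates that behaviour for a sequence of check results. It is a simplified model based on those definitions; it ignores `fastinter`/`downinter` and check timeouts, and it does not reproduce HAProxy's code.

```python
from typing import Iterable, List


def simulate_checks(results: Iterable[bool], fall: int = 3, rise: int = 2, up: bool = True) -> List[bool]:
    """Return the server's UP (True) / DOWN (False) state after each health check.

    An UP server goes DOWN after `fall` consecutive failed checks; a DOWN
    server comes back UP after `rise` consecutive successful checks.
    """
    states = []
    streak = 0  # consecutive results that contradict the current state
    for ok in results:
        if ok != up:
            streak += 1
            if streak >= (fall if up else rise):
                up = not up
                streak = 0
        else:
            streak = 0
        states.append(up)
    return states
```

For example, `simulate_checks([False, False, False])` gives `[True, True, False]`. A primary that stops answering is therefore removed after roughly `fall` x `inter`, about 9 seconds with these settings, plus any time spent waiting for checks to time out.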

Allow HAProxy SELinux connection permissions:

```
setsebool -P haproxy_connect_any=1
```

Open the firewall ports for the stats interface (7000), the HAProxy PostgreSQL listener (15432) and the pgsqlchk health check (25432), so that the other SMS node can query this node:

```
firewall-cmd --zone=public --add-port=7000/tcp --permanent
firewall-cmd --zone=public --add-port=15432/tcp --permanent
firewall-cmd --zone=public --add-port=25432/tcp --permanent
firewall-cmd --reload
```
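Once the ports are open, reachability can be checked from the other SMS node. The following is a minimal sketch using only the Python standard library. A successful TCP connect only shows that something is listening on the port; it does not show that the service behind it is healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable host names.
        return False
```

For example, running `port_open("sms-02", 25432)` on `sms-01` should return `True` once pgsqlchk is running on `sms-02` and the firewall rule is in place. The host name is only an example.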

Start HAProxy:

```
service haproxy start
```
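Once HAProxy is running, the stats page on port 7000 shows which server is receiving traffic. HAProxy can also export the same data as CSV when `;csv` is appended to the stats URI, which here means `http://<sms-node>:7000/;csv`. The following is a minimal sketch for reading that export. The function names are hypothetical, and only the `pxname`, `svname` and `status` columns are used.

```python
import csv
from typing import Dict
from urllib.request import urlopen


def server_status(csv_text: str, proxy: str) -> Dict[str, str]:
    """Map each server in `proxy` to its status (e.g. "UP", "DOWN") from HAProxy stats CSV."""
    # The header line starts with "# pxname,svname,..."; strip the "# " prefix.
    rows = csv.DictReader(csv_text.lstrip("# ").splitlines())
    return {
        row["svname"]: row["status"]
        for row in rows
        # Skip the aggregate FRONTEND/BACKEND rows and keep real servers only.
        if row["pxname"] == proxy and row["svname"] not in ("FRONTEND", "BACKEND")
    }


def fetch_stats(url: str, timeout: float = 5.0) -> str:
    """Download the stats CSV, e.g. fetch_stats("http://sms-01:7000/;csv")."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

For example, `server_status(fetch_stats("http://sms-01:7000/;csv"), "Postgres-ACD")` should report `UP` only for the current write primary.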

Reconfigure all Jarvis instances to use port 15432 to connect to the database rather than directly via 5432.

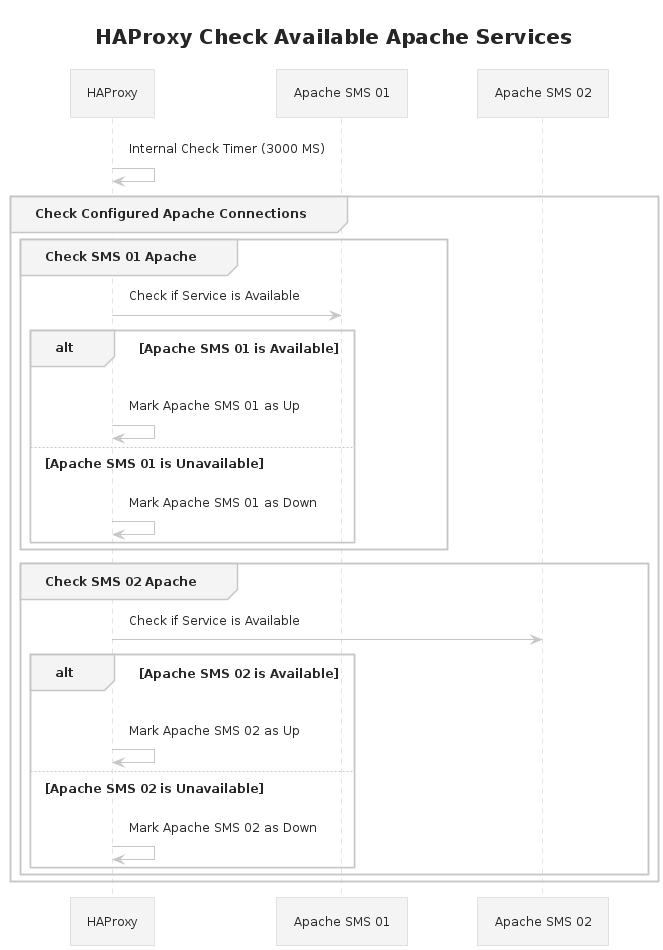

Configure HAProxy (FE Nodes)

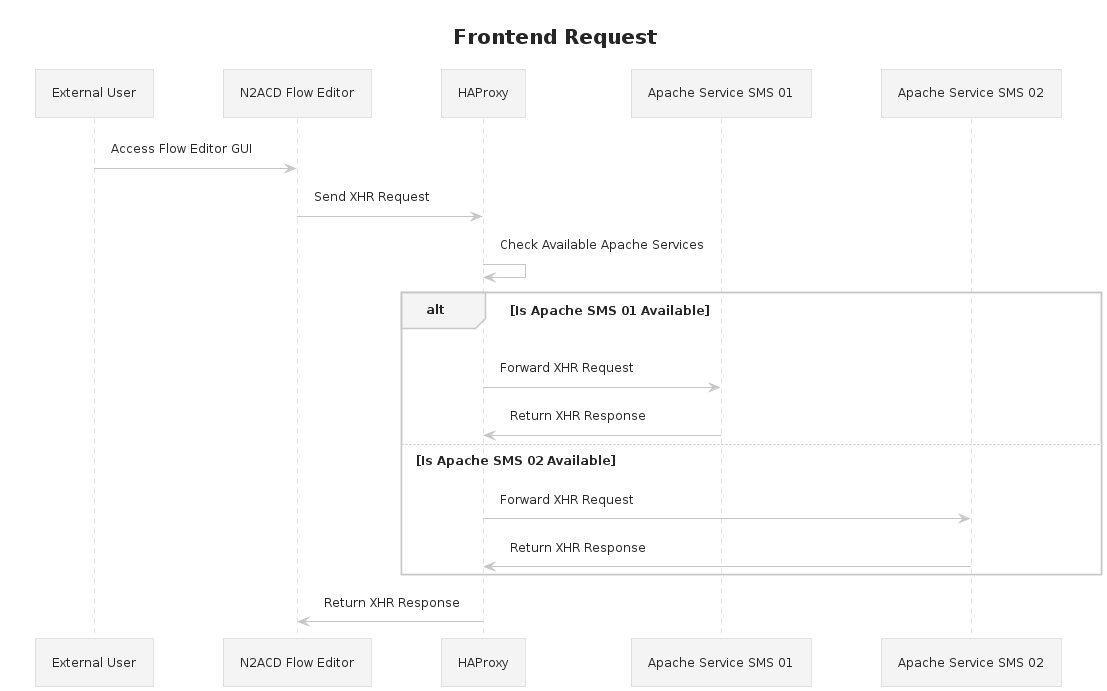

FE client nodes (primarily nodes that only install Apache to serve the client applications) differ in that they simply proxy traffic to a Jarvis / API layer running on one of the SMS nodes.

This scenario reuses the SMS nodes' database resolution by directing the proxied Jarvis traffic to one of the valid SMS nodes that has a running Apache server.

This follows the scenario:

- User attempts to connect to a client GUI.

- A load balancer or proxy routes traffic to one of our client servers that has a running Apache server.

- The Apache server serves the application which connects back to the node for the Jarvis / API Layer.

- The FE node does not run a Jarvis instance; Jarvis API requests are instead proxied towards the SMS nodes.

- HAProxy sits in front of Apache on the FE node and intercepts all requests to the Jarvis API, checking availability and routing them accordingly.

- HAProxy checks which of the SMS nodes are available and routes the API request to an available node.
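The FE routing decision can be summarised in two rules. Requests whose path begins with `/n2acd-fe/jarvis-agent` go to a healthy SMS node, and everything else goes to the local Apache on port 81. The following sketch models that decision. `route_request` and the `sms_health` mapping are illustrative. HAProxy's `backend jarvis` actually balances across all healthy servers (round robin by default), whereas this sketch simply picks the first one.

```python
from typing import Dict, Optional, Tuple

JARVIS_PREFIX = "/n2acd-fe/jarvis-agent"  # same prefix as the path_beg ACL in the FE config
LOCAL_APACHE = ("localhost", 81)          # Apache moved off port 80 so HAProxy can bind it


def route_request(path: str, sms_health: Dict[str, bool]) -> Optional[Tuple[str, int]]:
    """Return the (host, port) an FE request would be sent to, or None if no SMS node is up."""
    if path.startswith(JARVIS_PREFIX):
        healthy = [host for host, up in sorted(sms_health.items()) if up]
        # Simplification: take the first healthy node instead of round-robin balancing.
        return (healthy[0], 80) if healthy else None
    return LOCAL_APACHE
```

For example, with `sms-01` down, `route_request("/n2acd-fe/jarvis-agent/login", {"sms-01": False, "sms-02": True})` gives `("sms-02", 80)`. A request for `/index.html` stays on the local Apache.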

The configuration for FE nodes is significantly simpler: it only needs to check for a running Apache instance on each SMS node and proxy traffic through to an available node.

Back up the base HAProxy configuration, then edit it:

```
cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.install
nano /etc/haproxy/haproxy.cfg
```

Use the following example configuration, altering it as required:

```
global
    # Maximum inbound connections.
    maxconn 1024

defaults
    log global
    mode tcp
    retries 2
    timeout client 30m
    timeout connect 4s
    timeout server 30m
    timeout check 5s

# Enable a stats page that can be accessed on port 7000.
# This stats page shows the current routing and availability of all connections.
listen stats
    mode http
    bind *:7000
    stats enable
    stats uri /
    # Security can be enabled via auth directives.
    # stats auth username:password

# Listen for HTTP requests; only those that map to Jarvis are proxied to the SMS nodes.
# Everything else hits the current machine and is routed by Apache accordingly.
frontend http-in
    # Path-based ACLs require HTTP mode (the defaults section sets tcp).
    mode http
    # Listen on inbound port 80 requests.
    bind *:80
    # Set a boolean ACL if the current route maps to a Jarvis API.
    acl has_jarvis_uri path_beg /n2acd-fe/jarvis-agent
    # If the ACL matches, use the Jarvis backend for routing.
    use_backend jarvis if has_jarvis_uri
    # Otherwise route traffic as standard to the Apache backend.
    default_backend apache

# Set up the proxy to the Apache instance running on this machine for non-Jarvis traffic.
backend apache
    mode http
    server localhost localhost:81 maxconn 100

# Set up the backend proxy for Jarvis; this routes to the Jarvis APIs and only uses nodes that are up.
backend jarvis
    mode http
    # Perform status checks on the configured servers.
    option httpchk
    http-check expect status 200
    default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions
    # Configure each server to balance traffic across and check the status of.
    # This uses a basic HTTP check on port 80 to determine availability.
    server sms-01 sms-01:80 maxconn 100 check
    server sms-02 sms-02:80 maxconn 100 check
```
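With `option httpchk` and no arguments, HAProxy's documented default check is an `OPTIONS /` request, and `http-check expect status 200` requires a 200 response. When a node shows as down, the check can be approximated by hand from an FE node. The following is a minimal standard-library sketch; `httpchk` is a hypothetical helper, and it sends HTTP/1.1 where HAProxy's default check uses HTTP/1.0.

```python
import http.client


def httpchk(host: str, port: int = 80, timeout: float = 3.0) -> bool:
    """Approximate HAProxy's default `option httpchk`: send OPTIONS / and expect 200."""
    conn = http.client.HTTPConnection(host, port, timeout=timeout)
    try:
        conn.request("OPTIONS", "/")
        return conn.getresponse().status == 200
    except (OSError, http.client.HTTPException):
        # Unreachable host, refused connection, timeout or malformed response.
        return False
    finally:
        conn.close()
```

For example, if `httpchk("sms-01")` returns `False` on an FE node, HAProxy is likely to mark `sms-01` down in `backend jarvis` as well.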

Allow HAProxy SELinux connection permissions:

```
setsebool -P haproxy_connect_any=1
```

Allow connections to the Stats URI and HTTP ports:

```
firewall-cmd --zone=public --add-port=7000/tcp --permanent
# Standard HTTP and Proxy Ports.
firewall-cmd --zone=public --add-port=80/tcp --permanent
firewall-cmd --zone=public --add-port=81/tcp --permanent
firewall-cmd --zone=public --add-port=443/tcp --permanent
firewall-cmd --zone=public --add-port=444/tcp --permanent
firewall-cmd --reload
```

Alter Apache to listen on port 81 instead of 80. This frees port 80 for HAProxy, which routes traffic either to the Jarvis API on the SMS nodes or to the local Apache instance.

```
nano /etc/httpd/conf/httpd.conf
```

Change the Listen directive to:

```
Listen 81
```

Restart Apache:

```
service httpd restart
```

Start HAProxy:

```
service haproxy start
```